AI Provider

StartKit.AI is tested to work with all OpenAI chat models (such as gpt-4o) and Anthropic chat models (such as Claude 3.5).

You can also define your own custom models, for example to use a self-hosted Llama instance.

If you’re new to AI, we recommend using OpenAI, as it’s the easiest provider to get started with!

OpenAI

You can use OpenAI models with all of the StartKit.AI modules:

- Chat

- Images

- Text

- Embeddings

- Speech-to-Text

- Text-to-Speech

- Moderation

Creating an API key

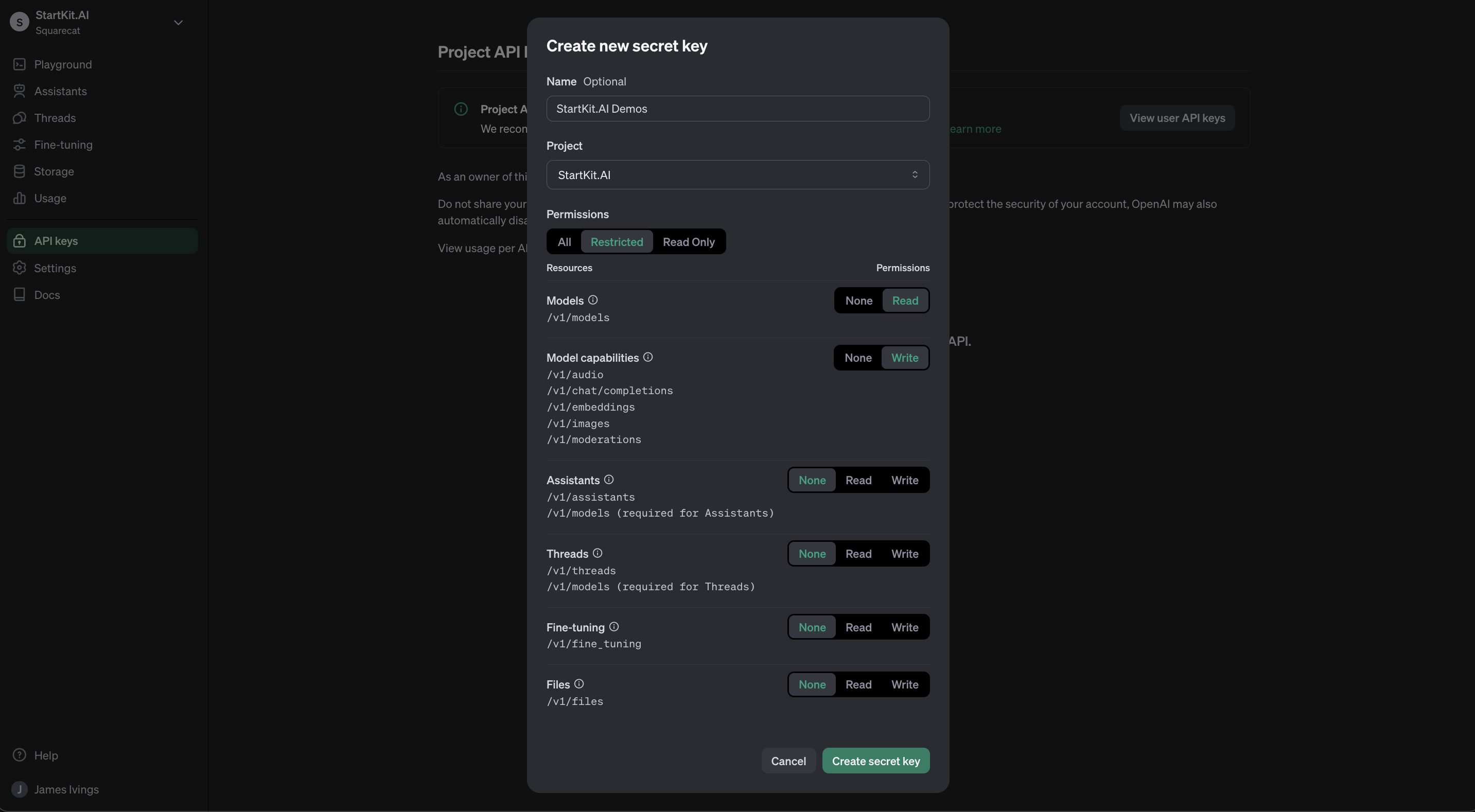

For this you will need an OpenAI API key. To get one, sign up for an account on the OpenAI website, navigate to the API Keys page, and click “Create new secret key”.

If you want to create a restricted key, StartKit.AI only requires access to the following resources:

- Models
  - /v1/models
- Model capabilities
  - /v1/audio
  - /v1/chat/completions
  - /v1/embeddings
  - /v1/images
  - /v1/moderations
- Assistants
  - /v1/assistants
  - /v1/models (required for Assistants)
- Threads
  - /v1/threads
  - /v1/models (required for Threads)
- Fine-tuning
  - /v1/fine_tuning
- Files
  - /v1/files
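If you want to sanity-check a new (possibly restricted) key, one option is to call OpenAI's /v1/models endpoint with it, since StartKit.AI needs access to that resource anyway. The Python sketch below only builds the request using the standard library. `build_models_request` is a hypothetical helper, not part of StartKit.AI, and reading OPENAI_KEY and OPENAI_ORG_ID from the process environment assumes your .env values have been loaded there.

```python
from __future__ import annotations

import os
import urllib.request

OPENAI_MODELS_URL = "https://api.openai.com/v1/models"


def build_models_request(api_key: str, org_id: str | None = None) -> urllib.request.Request:
    """Build (but do not send) a GET /v1/models request for an OpenAI key."""
    headers = {"Authorization": f"Bearer {api_key}"}
    if org_id:
        # Optional: the same organisation ID you may set as OPENAI_ORG_ID.
        headers["OpenAI-Organization"] = org_id
    return urllib.request.Request(OPENAI_MODELS_URL, headers=headers, method="GET")


# Assumes the .env values are available as environment variables.
req = build_models_request(os.environ.get("OPENAI_KEY", ""), os.environ.get("OPENAI_ORG_ID"))
print(req.get_method(), req.full_url)

# Sending it requires network access and a valid key, e.g.:
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)
```

A successful response means the key can at least list models; other resources from the list above still need their own permissions on a restricted key.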

Config .env

Then copy the key into your StartKit.AI .env file:

    OPENAI_KEY=
    # Organisation ID is optional, used to track in OpenAI
    # which organisation is making the requests
    OPENAI_ORG_ID=

Anthropic

You can use Anthropic models with the following StartKit.AI modules:

- Chat

- Embeddings

Config .env

Copy your Anthropic key into your StartKit.AI .env file:

    ANTHROPIC_KEY=

You can now include any Anthropic model in the chat.yml config file to make it available!

For example:

    models:
      - claude-3-5-sonnet-20240620
      - gpt-4o
      - custom/llama3.1

To see a list of all available models, check out the models page in the Admin Dashboard.
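To illustrate how a model list like this relates to the keys in your .env file, here is a minimal Python sketch that reports which API keys a list of models would need. It assumes, purely for illustration, that the provider can be inferred from the model ID prefix (claude- for Anthropic, gpt- for OpenAI, custom/ for custom models) and that custom models need no key here. StartKit.AI's actual model lookup may differ, and `provider_for` and `missing_keys` are hypothetical names.

```python
from __future__ import annotations

import os

# Hypothetical prefix -> (provider, env var holding its key) mapping.
# Illustrative only; not StartKit.AI's real resolution logic.
PREFIXES = [
    ("custom/", "custom", None),  # assumption: custom models define their own details
    ("claude-", "anthropic", "ANTHROPIC_KEY"),
    ("gpt-", "openai", "OPENAI_KEY"),
]


def provider_for(model_id: str) -> tuple[str, str | None]:
    """Return (provider, env var name) for a model ID, matched by prefix."""
    for prefix, provider, env_var in PREFIXES:
        if model_id.startswith(prefix):
            return provider, env_var
    raise ValueError(f"unknown model: {model_id}")


def missing_keys(models: list[str], env: dict[str, str]) -> list[str]:
    """List env vars needed by `models` that are unset or empty, in first-use order."""
    missing: list[str] = []
    for model_id in models:
        _, env_var = provider_for(model_id)
        if env_var and not env.get(env_var) and env_var not in missing:
            missing.append(env_var)
    return missing


models = ["claude-3-5-sonnet-20240620", "gpt-4o", "custom/llama3.1"]
print(missing_keys(models, dict(os.environ)))
```

An empty value such as `OPENAI_KEY=` counts as missing, which matches how the .env template above starts out.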

StartKit.AI should work with any custom model; you just need to define its details yourself.

See our Custom Models section for more details.

Using multiple providers

StartKit.AI also supports using multiple AI providers. In the configs/chat.yml file, simply list the models that you want to use. They are attempted in order, with each model tried only if the previous one fails:

    models:
      - claude-3-5-sonnet-20240620
      - gpt-4o
      - custom/llama3.1

Now we can get the rest of the external services set up, starting with a MongoDB database in the next section.
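The in-order fallback described under Using multiple providers can be sketched in Python as a loop that tries each configured model and moves on when a call raises. This is a minimal illustration under assumptions, not StartKit.AI's implementation: `complete_with_fallback` and the stub `fake_call` are hypothetical, and a real version would call the provider SDKs instead of a stub.

```python
from __future__ import annotations

from typing import Callable, Sequence


def complete_with_fallback(
    models: Sequence[str],
    call_model: Callable[[str, str], str],
    prompt: str,
) -> tuple[str, str]:
    """Try each model in order; return (model_id, reply) from the first that succeeds."""
    errors: list[str] = []
    for model_id in models:
        try:
            return model_id, call_model(model_id, prompt)
        except Exception as exc:  # any failure moves on to the next model
            errors.append(f"{model_id}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


# Hypothetical stub standing in for real provider calls.
def fake_call(model_id: str, prompt: str) -> str:
    if model_id.startswith("claude-"):
        raise ConnectionError("Anthropic unavailable")
    return f"{model_id} says hi"


models = ["claude-3-5-sonnet-20240620", "gpt-4o", "custom/llama3.1"]
print(complete_with_fallback(models, fake_call, "Hello"))
# → ('gpt-4o', 'gpt-4o says hi')
```

Catching every exception keeps the sketch short; a real implementation would more likely fall back only on provider or network errors, so that bugs in your own code are not silently skipped.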